Believe it or not, software exists that all but casts people with SUD to the curb

This post is reprinted with permission from one of TreatmentMagazine.com’s go-to blogs about addiction, treatment and recovery: Recovery Review.

By William Stauffer

People like me in long-term recovery can face horrible treatment if it becomes known we have had a substance use disorder. This is particularly true when we may need controlled substances as part of our legitimate medical care. There are now algorithms being used to scan our personal and medical data to see if we may be drug-seekers. If you get identified as a drug addict, you are likely to get treated poorly, kicked out, and not helped. If we want to get more Americans into sustained recovery, we need to start treating people more fairly in our medical care systems. This must include fixing how we identify and provide care to persons with suspected addiction in our hospitals and doctors’ offices.

A number of years back, I had a dental emergency. I have had a few of those in my life, unfortunately. I had a procedure, and the antibiotics the dentist gave me were not strong enough. The infection came roaring back with a vengeance. The side of my face looked like I had a golf ball in my cheek. It is the most pain I have ever experienced—a 10 on the pain scale. This occurred while I was visiting family in western Pennsylvania. It got really bad in the middle of the night. I went into a rural hospital and asked for help. The staff took turns coming into the room to look at me. I was a sight, and I am sure everyone wanted to see the patient who looked like a squirrel with an acorn in his mouth.

They wrote out scripts for a more powerful antibiotic and gave me a strong opioid to provide some relief. I recall them mentioning that it was addictive and asking if I knew that there were risks. I told them I was a clinician who worked in addictions, and I did know that the meds I needed that night were addictive. I did not tell them I was in recovery. I was afraid that they would leave me in excruciating pain. I have experienced horrible treatment at the hands of medical staff who became aware I had a history of substance use issues. It does not even matter that I am in recovery. I have had hundreds of patients recount similar tales of unprofessional care at the hands of doctors and nurses. I could not tell them I was in recovery; I did not want the same to happen to me that night, while I was in such agonizing pain.

I got the meds and went to stay with my family. I took the meds and switched over to an NSAID as soon as the antibiotics began to work. I let my family know I was taking an opioid. That is my standard protocol for the handful of times in 36 years of recovery when I have needed to take medicine with an addictive potential. The meds were what I needed in this instance. I got through the experience fine, with zero impact on my recovery.

Society holds a stigmatized view of people like me: that any use of such a medication results in a relapse. It is simply not reality. It just means we need to be a little more cautious and practice good self-care. We are just as capable of doing so as a diabetic is of navigating a day with dietary risks.

I have been thinking about this since reading a journal article from the Annals of Emergency Medicine, "In a World of Stigma and Bias, Can a Computer Algorithm Really Predict Overdose Risk?" Bamboo Health has developed software called NarxCare that gathers people's data to generate an overdose risk score. It uses an algorithm, and reports are emerging that, far too often, patients with legitimate medical problems end up being scored as potential drug addicts. They are then treated like pariahs by medical professionals: not offered help, but kicked to the curb and treated like criminals.

As this article notes, NarxCare gathers information such as criminal records, sexual abuse history, distance traveled to fill a prescription, and even pet prescriptions to assign risk scores to each person. Minorities score higher, as our criminal justice system has historically targeted Black, Indigenous and people of color for drug crimes and arrested them at higher rates than whites. Women, who have more documented sexual trauma than men, get scored higher. How does addiction treatment or self-identified recovery score on the algorithm? That is proprietary.

A recent and quite comprehensive legal review, published in the California Law Review, “Dosing Discrimination: Regulating PDMP Risk Scores,” by Jennifer D. Oliva, Associate Dean for Faculty Research and Development, Professor of Law, and Director, Center for Health & Pharmaceutical Law, Seton Hall University School of Law, notes:

“NarxCare risk scoring likely exacerbates existing disparities in chronic pain treatment for Black patients, women, individuals who are socioeconomically marginalized, rural individuals, and patients with complex, co-morbid disabilities and OUD.”

Professor Oliva has found that the software flags people who are rural and travel far for medical care, or who pay cash and use multiple payment methods. Such payment methods are often used by people who are uninsured or underinsured, who scramble to find ways to pay for their medication.

If your sexual trauma history gets into your medical record, you may end up not being able to obtain the same medical care as others, as you could get flagged as a potential drug addict at risk for overdose. As I noted, the software is proprietary—not open for validation, and not regulated. Oliva notes in her detailed legal review of the software:

“There are no other examples of automated predictive risk scoring models created primarily for law enforcement surveillance that are used in clinical practice. This is likely because such cross-over use of risk assessment tools is ill advised. That stated, to the extent that clinicians do use PDMP risk scores to inform or determine patient treatment, PDMP software platforms ought to be subject to the same regulatory oversight as other health care predictive analytic tools used for similar purposes. The significant questions raised about PDMP risk score accuracy and such risk scores’ potential to disparately impact the health and well-being of marginalized patients demand immediate regulatory attention.”

An article at Wired.com describes a woman who was cut off from care by her primary care provider. Her dogs had been prescribed opioids and benzodiazepines, and that gave her a high score for potential addiction. She became a person to be gotten rid of, not helped. She became a medical care pariah. She got the drug addict treatment; she was shown the door and terminated from care. This proprietary software influences medical care for millions of Americans. I found that Rite Aid uses it in Pennsylvania and 11 other states, as do Walmart and CVS.

As noted above, it appears that instead of being used to get persons who are at risk for addiction the care they need, the software is often used to remove persons from care. I ran across countless stories where that was the outcome. This may stem from doctors' and pharmacists' fear of DEA sanctions. Because patients cut off from such medications face withdrawal and may be pushed to the streets, the practice may actually increase the overdose risk of the very patients it identifies as high-risk.

When the software is in error, there is little recourse for the patient. It is highly unlikely the issue will be corrected. Once you get flagged as a drug addict by this unvalidated proprietary software, good luck clearing it from your electronic health record. Persons in recovery have every reason to fear how the flow of such information will influence their treatment. We have a system of care designed to find and fail us. You have the right to request that something be removed from your electronic health record. Your medical provider is required to respond, but they can just say no. A 2014 study found that if you requested a change to your medical record in regard to drug-seeking behavior, your request had less than a 10% chance of being approved. Marked for life. The letter A for "addict," written into your EHR for eternity.

As addiction is a medical disorder, we should be providing medical care to a person with a substance use disorder as we would to, say, a diabetic: without judgment and with the same care and concern as any other patient. We do not do so in America. Being treated like a drug addict in America means being treated like an outcast, a member of an unclean caste. This says a lot about how far we have to go with respect to proper care for addiction in America.

For what other medical condition would unvalidated, proprietary software be used to guide medical care? Hundreds of thousands of persons like me across America are forced to think about medical care bias against us every time we seek help. We must change how we treat people with substance misuse issues and those of us in recovery. We need to be cared for respectfully and with compassion, just as we expect for any other medical condition.

Last year, I wrote a piece titled "Take the Drug Addicts Out to the Hospital Parking Lot and Shoot Them." I suggested then that we need stronger privacy laws and that we must hold medical professionals accountable for discrimination in the treatment of persons who have or are suspected of having a substance use disorder. We need zero-tolerance policies on discriminatory treatment of persons with a substance use disorder written into every hospital policy. They should include strong administrative sanctions for all staff who discriminate against us and for everyone who witnesses it and fails to report it. Put such policies in place in every medical institution in the country.

How can we get more people into recovery in a system of care that acts so punitively towards us? If we want to increase the number of Americans in recovery, we must improve the care provided to persons with substance use disorders. We need to ask hard questions about how such algorithms impact a person who has or is suspected of having a substance use disorder. We must receive the same standard of care as everyone else. We must stop medical care bias against us.

We will know we have a healthcare system that works for persons with substance use disorders when people like me no longer have to be afraid of being identified as having such a history in our medical care systems. When we experience no shame, no negative judgment, and no disparate care, we will have arrived at where we need to be: a day when we no longer live in fear of these algorithms of discrimination. We have a long way to go to meet that standard, but we must work toward it if we are to actually help the millions of Americans who need help with a substance use disorder.

This Recovery Review post is by William Stauffer, who has been executive director of Pennsylvania Recovery Organization Alliance (PRO-A), the statewide recovery organization of Pennsylvania. He has been in long-term recovery since age 21 and has been actively engaged in public policy in the recovery arena for most of those years. He is also an adjunct professor of Social Work at Misericordia University in Dallas, Pa. Find more of his writing, as well as a thought-provoking range of articles, insights and expert opinions on treatment and addiction, at RecoveryReview.blog.

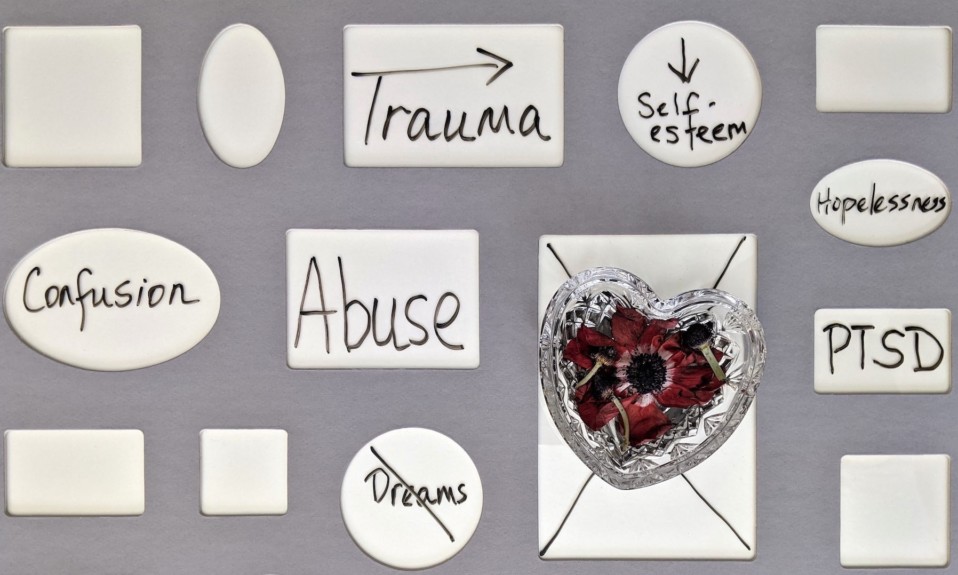

Top photo: Markus Spiske; bottom photo: Keem Ibarra